SpaceNet 4: Off-Nadir Buildings

The Problem

Can you help us automate mapping from off-nadir imagery? In this challenge, competitors were tasked with finding automated methods for extracting map-ready building footprints from high-resolution satellite imagery captured at high off-nadir angles. In many disaster scenarios, the first post-event imagery is captured further off-nadir than the imagery used in standard mapping. Being able to use higher off-nadir imagery allows more flexibility in acquiring and using satellite imagery after a disaster. Moving toward more accurate, fully automated extraction of building footprints will help bring innovation to computer vision methods applied to high-resolution satellite imagery, and ultimately help create better maps where they are needed most.

The main purpose of this challenge was to extract building footprints from increasingly off-nadir satellite images. The submitted polygons were compared to ground truth, and the quality of each solution was measured using the SpaceNet metric.

Read more about the challenge winners on our blog!

RELATED BLOGS

- The good and the bad in the SpaceNet Off-Nadir Building Footprint Extraction Challenge

- The SpaceNet Challenge Off-Nadir Buildings: Introducing the winners

- Challenges with SpaceNet 4 off-nadir satellite imagery: Look angle and target azimuth angle

- A baseline model for the SpaceNet 4: Off-Nadir Building Detection Challenge

- Introducing the SpaceNet Off-Nadir Imagery Dataset

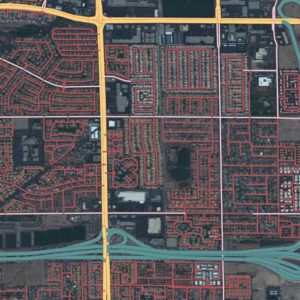

The Data: over 120,000 building footprints across 665 sq km of Atlanta, GA, with 27 associated WorldView-2 (WV-2) images.

This dataset contains 27 8-band WorldView-2 images taken over Atlanta, GA, on December 22, 2009. Their off-nadir angles range from 7 degrees to 54 degrees.

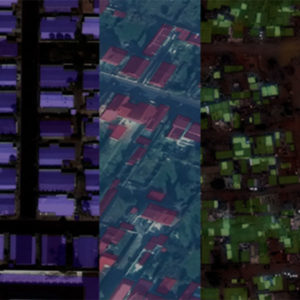

For the competition, the 27 images are broken into 3 segments based on their off-nadir angle:

- Nadir: 0-25 degrees

- Off-Nadir: 26-40 degrees

- Very Off-Nadir: 40-55 degrees
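The segment ranges above leave a gap between 25 and 26 degrees and share the 40-degree boundary, so any code that bins collects needs an explicit rule. The sketch below is an assumption, not the official assignment logic: it treats anything below 26 degrees as Nadir and up to and including 40 degrees as Off-Nadir, which agrees with every collect listed in the Collection Details table. The function name `nadir_category` is hypothetical.

```python
def nadir_category(off_nadir_deg: float) -> str:
    """Map an off-nadir angle in degrees to a SpaceNet 4 segment name.

    Assumed boundaries (the published ranges touch/overlap at 25/26 and 40):
      Nadir:          angle < 26
      Off-Nadir:      26 <= angle <= 40
      Very Off-Nadir: 40 < angle <= 55
    """
    if off_nadir_deg < 0:
        raise ValueError("off-nadir angle must be non-negative")
    if off_nadir_deg < 26:
        return "Nadir"
    if off_nadir_deg <= 40:
        return "Off-Nadir"
    if off_nadir_deg <= 55:
        return "Very Off-Nadir"
    raise ValueError(f"{off_nadir_deg} degrees is outside the 0-55 degree range")
```

For example, the 25.4-degree collect maps to "Nadir" and the 42-degree collect maps to "Very Off-Nadir", matching the Category column of the table.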

The entire set of images was then tiled into 450 m × 450 m tiles.
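To illustrate what a 450 m tiling grid means in practice, here is a minimal sketch that splits a rectangular extent into square tiles. It assumes a projected coordinate system in meters and a grid anchored at the extent's lower-left corner, with edge tiles clipped to the extent; those choices, and the helper name `tile_bounds`, are assumptions for illustration and not the procedure actually used to build the dataset.

```python
import math
from typing import List, Tuple

# (min_x, min_y, max_x, max_y) in meters, in a projected CRS (assumption)
Bounds = Tuple[float, float, float, float]


def tile_bounds(extent: Bounds, tile_size_m: float = 450.0) -> List[Bounds]:
    """Split an extent into tile_size_m x tile_size_m tiles, row by row.

    Assumption: the grid starts at the lower-left corner and the last
    row/column is clipped to the extent rather than padded.
    """
    if tile_size_m <= 0:
        raise ValueError("tile size must be positive")
    min_x, min_y, max_x, max_y = extent
    n_cols = math.ceil((max_x - min_x) / tile_size_m)
    n_rows = math.ceil((max_y - min_y) / tile_size_m)
    tiles = []
    for row in range(n_rows):
        for col in range(n_cols):
            x0 = min_x + col * tile_size_m
            y0 = min_y + row * tile_size_m
            # Clip the far edges so no tile extends past the extent.
            tiles.append((x0, y0, min(x0 + tile_size_m, max_x), min(y0 + tile_size_m, max_y)))
    return tiles
```

A 1,000 m × 1,000 m extent, for instance, yields a 3 × 3 grid whose last row and column are only 100 m wide under this clipping assumption.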

See the labeling guide and schema for details about how the dataset was created.

Catalog

aws s3 ls s3://spacenet-dataset/spacenet/SN4_buildings/

Sample Data

Off-Nadir Imagery Samples: two samples from each off-nadir image.

To download processed 450 m × 450 m tiles of AOI_6_Atlanta (728.8 MB) with associated building footprints:

aws s3 cp s3://spacenet-dataset/spacenet/SN4_buildings/tarballs/summaryData.tar.gz .

Training Data

SpaceNet Off-Nadir Training Base Directory:

aws s3 ls s3://spacenet-dataset/spacenet/SN4_buildings/tarballs/train/

SpaceNet Off-Nadir Building Footprint Extraction Training Data Labels (15 MB)

aws s3 cp s3://spacenet-dataset/spacenet/SN4_buildings/tarballs/train/geojson.tar.gz .

SpaceNet Off-Nadir Building Footprint Extraction Training Data Imagery (186 GB)

To download processed 450 m × 450 m tiles of AOI 6 Atlanta:

Each of the 27 collects is packaged as a separate .tar.gz named "Atlanta_nadir{nadir-angle}_catid_{catid}.tar.gz". Each .tar.gz is about 7 GB.

aws s3 cp s3://spacenet-dataset/spacenet/SN4_buildings/tarballs/train/ . --exclude "*geojson.tar.gz" --recursive
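Because each collect ships as its own tarball, it can help to recover the nadir angle and catalog ID from a file name, for example to download only one segment. The sketch below parses names following the "Atlanta_nadir{nadir-angle}_catid_{catid}.tar.gz" pattern; it assumes the angle is written as a whole number and the catalog ID as uppercase hexadecimal. The example name in the usage note is built from that pattern and the first row of the Collection Details table, and `parse_tarball_name` is a hypothetical helper.

```python
import re
from typing import NamedTuple


class Collect(NamedTuple):
    nadir_angle: int  # assumed to be an integer in the file name
    catid: str        # WorldView-2 catalog ID


# Built from the naming pattern "Atlanta_nadir{nadir-angle}_catid_{catid}.tar.gz".
_TARBALL_RE = re.compile(r"^Atlanta_nadir(\d+)_catid_([0-9A-F]+)\.tar\.gz$")


def parse_tarball_name(name: str) -> Collect:
    """Extract the nadir angle and catalog ID from a training tarball name."""
    match = _TARBALL_RE.match(name)
    if match is None:
        raise ValueError(f"not a per-collect training tarball: {name!r}")
    return Collect(int(match.group(1)), match.group(2))
```

For example, `parse_tarball_name("Atlanta_nadir7_catid_1030010003D22F00.tar.gz")` returns `Collect(nadir_angle=7, catid='1030010003D22F00')`, while `geojson.tar.gz` is rejected with a `ValueError`, mirroring the `--exclude` filter in the command above.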

Testing Data

AOI 6 Atlanta – Building Footprint Extraction Testing Data

To download processed 450 m × 450 m tiles of AOI 6 Atlanta (5.8 GB):

aws s3 cp s3://spacenet-dataset/spacenet/SN4_buildings/tarballs/SN4_buildings_AOI_6_Atlanta_test_public.tar.gz .

The Metric

In the SpaceNet Off-Nadir Building Extraction Challenge, the metric for ranking entries is the SpaceNet Metric.

This metric is an F1 score based on the intersection over union (IoU) between proposed and ground-truth building footprints, with an IoU threshold of 0.5 for counting a proposal as a match.
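The sketch below shows how IoU-thresholded matching turns footprints into true positive, false positive, and false negative counts. It is a simplification under loud assumptions: footprints are axis-aligned boxes rather than the arbitrary polygons SpaceNet actually uses, matching is greedy and one-to-one in proposal order, and an IoU of exactly 0.5 is counted as a match. The function names are hypothetical; this is not the official SpaceNet evaluation code.

```python
from typing import List, Sequence, Tuple

# Simplification: footprints as axis-aligned boxes (min_x, min_y, max_x, max_y).
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_footprints(proposals: Sequence[Box], ground_truth: Sequence[Box],
                     threshold: float = 0.5) -> Tuple[int, int, int]:
    """Greedy one-to-one matching; returns (tp, fp, fn)."""
    unmatched: List[Box] = list(ground_truth)
    tp = 0
    for prop in proposals:
        if not unmatched:
            break  # every remaining proposal is a false positive
        ious = [box_iou(prop, gt) for gt in unmatched]
        best = max(range(len(ious)), key=ious.__getitem__)
        if ious[best] >= threshold:  # assumption: ties at 0.5 count as matches
            tp += 1
            unmatched.pop(best)  # each ground-truth footprint matches at most once
    return tp, len(proposals) - tp, len(ground_truth) - tp
```

Removing a ground-truth footprint once it is matched keeps the counting one-to-one, so two overlapping proposals on the same building yield one true positive and one false positive.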

The F1 score is calculated from the total true positives, false positives, and false negatives within each nadir segment; the three per-segment F1 scores are then averaged.

F1-Score Total = mean(F1-Score-Nadir, F1-Score-Off-Nadir, F1-Score-Very-Off-Nadir)
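The aggregation can be sketched as follows, using the standard identity F1 = 2TP / (2TP + FP + FN), which equals the harmonic mean of precision and recall. The per-segment counts are assumed to be already summed over all tiles in that segment (for example with a matcher like the one described above); returning 0.0 for a segment with no footprints at all is an assumption. The helper names are hypothetical.

```python
from typing import Dict, Tuple

SEGMENTS = ("Nadir", "Off-Nadir", "Very Off-Nadir")


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2*TP / (2*TP + FP + FN); 0.0 when there is nothing to score (assumption)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def total_f1(counts: Dict[str, Tuple[int, int, int]]) -> float:
    """Average the per-segment F1 scores; counts maps segment -> (tp, fp, fn)."""
    missing = [s for s in SEGMENTS if s not in counts]
    if missing:
        raise KeyError(f"missing segment counts: {missing}")
    return sum(f1_score(*counts[s]) for s in SEGMENTS) / len(SEGMENTS)
```

With illustrative counts of (80, 20, 20), (60, 40, 40), and (40, 60, 60) for the three segments, the per-segment scores are 0.8, 0.6, and 0.4, so the total is 0.6. Because each segment is scored separately before averaging, the very off-nadir images weigh as much as the nadir images even if they contribute fewer matches.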

Collection Details

| # | Catalog ID | Pan Resolution (m) | Off-Nadir Angle (deg) | Target Azimuth (deg) | Category |
|---|---|---|---|---|---|
| 1 | 1030010003D22F00 | 0.48 | 7.8 | 118.4 | Nadir |
| 2 | 10300100023BC100 | 0.49 | 8.3 | 78.4 | Nadir |
| 3 | 103001000399300 | 0.49 | 10.5 | 148.6 | Nadir |
| 4 | 1030010003CAF100 | 0.48 | 10.6 | 57.6 | Nadir |
| 5 | 1030010002B7D800 | 0.49 | 13.9 | 162 | Nadir |
| 6 | 10300100039AB000 | 0.49 | 14.8 | 43 | Nadir |
| 7 | 1030010002649200 | 0.52 | 16.9 | 168.7 | Nadir |
| 8 | 1030010003C92000 | 0.52 | 19.3 | 35.1 | Nadir |
| 9 | 1030010003127500 | 0.54 | 21.3 | 174.7 | Nadir |
| 10 | 103001000352C200 | 0.54 | 23.5 | 30.7 | Nadir |
| 11 | 103001000307D800 | 0.57 | 25.4 | 178.4 | Nadir |
| 12 | 1030010003472200 | 0.58 | 27.4 | 27.7 | Off-Nadir |
| 13 | 1030010003315300 | 0.61 | 29.1 | 181 | Off-Nadir |
| 14 | 10300100036D5200 | 0.62 | 31 | 25.5 | Off-Nadir |
| 15 | 103001000392F600 | 0.65 | 32.5 | 182.8 | Off-Nadir |
| 16 | 1030010003697400 | 0.68 | 34 | 23.8 | Off-Nadir |
| 17 | 1030010003895500 | 0.74 | 37 | 22.6 | Off-Nadir |
| 18 | 1030010003832800 | 0.8 | 39.6 | 21.5 | Off-Nadir |
| 19 | 10300100035D1B00 | 0.87 | 42 | 20.7 | Very Off-Nadir |
| 20 | 1030010003CCD700 | 0.95 | 44.2 | 20 | Very Off-Nadir |
| 21 | 1030010003713C00 | 1.03 | 46.1 | 19.5 | Very Off-Nadir |
| 22 | 10300100033C5200 | 1.13 | 47.8 | 19 | Very Off-Nadir |
| 23 | 1030010003492700 | 1.23 | 49.3 | 18.5 | Very Off-Nadir |
| 24 | 10300100039E6200 | 1.36 | 50.9 | 18 | Very Off-Nadir |
| 25 | 1030010003BDDC00 | 1.48 | 52.2 | 17.7 | Very Off-Nadir |
| 26 | 1030010003CD4300 | 1.63 | 53.4 | 17.4 | Very Off-Nadir |
| 27 | 1030010003193D00 | 1.67 | 54 | 17.4 | Very Off-Nadir |

Citation Instructions

Weir, N., Lindenbaum, D., Bastidas, A., Van Etten, A., McPherson, S., Shermeyer, J., Vijay, V.K., & Tang, H. (2019). SpaceNet MVOI: A Multi-View Overhead Imagery Dataset. 2019 IEEE/CVF International Conference on Computer Vision (ICCV), 992-1001.

License

The SpaceNet Dataset by SpaceNet Partners is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

The commercialization of the geospatial industry has led to an explosive amount of data being collected to characterize our changing planet. One area for innovation is the application of computer vision and deep learning to extract information from satellite imagery at scale. CosmiQ Works, Radiant Solutions and NVIDIA have partnered to release the SpaceNet data set to the public to enable developers and data scientists to work with this data.